AI is all around us. As consumers, we all need to understand what AI is, how it impacts our lives, and, most importantly, how to seek the truth in a world where AI is increasingly shaping the information we consume. Recently, there has been a flood of opinion pieces and news articles about the newest versions of AI—think OpenAI’s ChatGPT or Google’s Gemini. The capabilities of these systems are nothing short of impressive, but they also come with risks.

The truth is, AI has been embedded in our daily lives for much longer than many realize. For example, when you’re shopping online and you’re prompted to add “related items” to your cart, that’s AI at work. These recommendation systems are powered by algorithms designed to predict your preferences based on past behavior. Over the last decade, AI technology has evolved dramatically, and now it influences much of what we see, read, and interact with online. But do we truly understand how deep its reach goes?

The Everyday Impact of AI

Anyone who uses social media has been touched by AI, even if they don’t realize it. Social media platforms like Facebook, Instagram, and TikTok are built on algorithms designed to keep users engaged. The longer you keep scrolling, the more data the algorithm collects, and the more it learns about your preferences and habits.

Who hasn’t found themselves lost in a social media rabbit hole, scrolling through posts for hours without even realizing it? This is the power of AI-driven recommendation systems at play. These algorithms continuously monitor your behavior—what you like, comment on, or share—and use this data to push similar content into your feed. At first glance, this might seem beneficial. After all, it can help you discover new topics or content you didn’t know you were interested in.
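To make this concrete, here is a minimal Python sketch of how such a system might turn your behavior into recommendations. Everything in it is an assumption for illustration: the interaction weights, the `build_profile` and `recommend` functions, and the sample data are hypothetical, and real platforms use far more elaborate models.

```python
from collections import Counter

# Hypothetical weights: stronger signals (shares) count for more than likes.
INTERACTION_WEIGHTS = {"like": 1.0, "comment": 2.0, "share": 3.0}


def build_profile(interactions):
    """Sum weighted interactions per topic.

    `interactions` is a list of (topic, action) pairs.
    """
    profile = Counter()
    for topic, action in interactions:
        profile[topic] += INTERACTION_WEIGHTS[action]
    return profile


def recommend(profile, candidates, k=2):
    """Return the k candidate posts whose topic the user engaged with most."""
    ranked = sorted(candidates, key=lambda post: profile[post["topic"]], reverse=True)
    return ranked[:k]


# Made-up interaction history and candidate posts.
history = [
    ("cooking", "like"),
    ("politics", "share"),
    ("politics", "comment"),
    ("cooking", "like"),
]
candidates = [
    {"id": 1, "topic": "gardening"},
    {"id": 2, "topic": "politics"},
    {"id": 3, "topic": "cooking"},
]

profile = build_profile(history)
top = recommend(profile, candidates)
print([post["topic"] for post in top])
```

In this toy example, the politics post ranks first because shares and comments were assumed to count more than likes. The gardening post never surfaces, since the user has no history with that topic.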

But in reality, this algorithmic targeting is a double-edged sword. The goal of these systems is not necessarily to expand your knowledge or enhance your well-being. It’s to keep you engaged. In many cases, this can lead you down narrow, sometimes even dangerous, informational pathways.

The Problem with AI-Driven Content

Behind every algorithm is a team of programmers. These engineers design the systems to maximize user engagement, often without fully weighing the quality or truthfulness of the content being shared. In the past, we relied on trained professionals (journalists, experts, and educators) to sift through information, verify facts, and present us with reliable sources. Today, engagement-driven algorithms don’t discern fact from fiction; they simply prioritize content that will keep you clicking, liking, and sharing.

This creates a dangerous environment where anyone with an agenda can manipulate the system. All they need is emotionally charged, click-worthy content, and the algorithm will do the rest, ensuring it reaches the right audience. Whether the content is truthful, misleading, or outright false doesn’t matter to the algorithm. Such content is crafted to trigger emotions such as anger, fear, and joy, because emotionally charged content generates the most engagement.
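A short Python sketch shows why accuracy drops out of the picture when a feed is ordered purely by engagement. This is an illustrative assumption, not any platform’s actual code: the `Post` class, the `predicted_engagement` scores, and the sample headlines are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    predicted_engagement: float  # hypothetical score between 0 and 1
    is_accurate: bool            # known to us, but never read by the ranker


def rank_feed(posts):
    """Order posts by predicted engagement only; accuracy plays no role."""
    return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)


# Made-up posts with made-up scores.
posts = [
    Post("City council publishes budget report", 0.12, True),
    Post("SHOCKING: what they don't want you to know", 0.91, False),
    Post("Local shelter seeks volunteers", 0.35, True),
]

feed = rank_feed(posts)
for post in feed:
    print(f"{post.predicted_engagement:.2f}  {post.title}")
```

The ranking function never looks at `is_accurate`, so in this example the inaccurate but provocative post lands at the top of the feed.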

As a result, we’re often bombarded with sensationalized headlines, misinformation, and conspiracy theories, all delivered through the algorithmic channels that we’ve come to rely on. This creates an echo chamber effect, where we’re fed the same ideas, reinforced by our likes and shares, without ever challenging or questioning the validity of the information.
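The echo chamber effect can be pictured as a feedback loop. The Python sketch below is a deliberately simplified simulation under stated assumptions: the feed always shows the user’s current top topic, and every showing produces exactly one more click on it. The `simulate_feedback_loop` function and the starting numbers are hypothetical.

```python
def simulate_feedback_loop(interest, rounds):
    """Repeatedly show the user's top topic and record one more click on it.

    Returns the top topic's share of all recorded interest after each round.
    """
    interest = dict(interest)  # copy so the caller's data is left untouched
    shares = []
    for _ in range(rounds):
        top_topic = max(interest, key=interest.get)
        interest[top_topic] += 1  # assumed: each showing yields one click
        shares.append(interest[top_topic] / sum(interest.values()))
    return shares


# Made-up starting interest: politics is only slightly ahead.
starting_interest = {"politics": 4, "cooking": 3, "sports": 3}
shares = simulate_feedback_loop(starting_interest, rounds=10)
print(f"Top topic share: {shares[0]:.2f} after 1 round, {shares[-1]:.2f} after 10")
```

Under these assumptions, a topic that started with 40 percent of the user’s recorded interest accounts for 70 percent after ten rounds, even though nothing about the user’s underlying curiosity changed.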

The Need for Media Literacy in the Age of AI

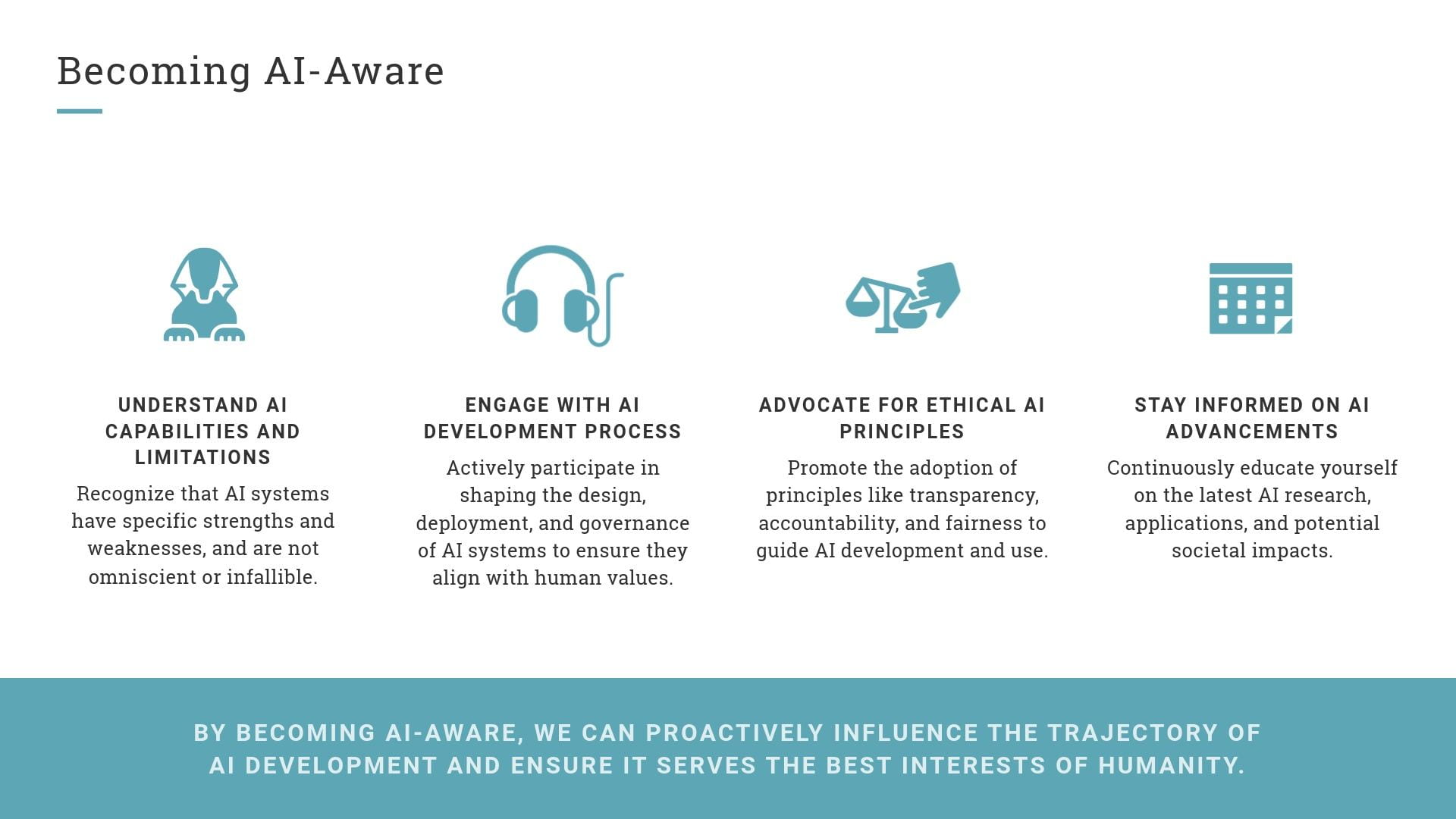

So what can we do about this? The answer lies in understanding the tools at our disposal and becoming more critical consumers of information. In the age of AI, it’s essential to become media literate. We need to ask ourselves where the information is coming from, who is sharing it, and what their motivations might be. The AI-powered recommendation engine might be steering us toward content that aligns with our interests, but that doesn’t mean it aligns with the truth.

We must also be aware of our own biases. Algorithms are designed to cater to our preferences, often reinforcing the views and opinions we already hold. This is why it’s more important than ever to seek out diverse perspectives and challenge our own assumptions.

Furthermore, the responsibility doesn’t fall entirely on consumers. Social media platforms, tech companies, and AI developers must take responsibility for the ethical use of AI. They need to prioritize transparency, accuracy, and fairness in the algorithms they create, ensuring that these systems promote truth rather than simply generating engagement. But until that happens, it’s up to us to be vigilant.

Conclusion

AI is here to stay, and it will continue to shape the way we consume information and interact with the world around us. While it brings many conveniences and opportunities, we must also be aware of its potential dangers. By understanding how AI works and its role in our daily lives, we can make more informed decisions, challenge misinformation, and push for a more ethical, responsible use of this powerful technology.

In the end, AI can be a tool for good—but only if we are smart about how we use it. The next time you find yourself scrolling endlessly through your social media feed or reading an article that just *feels* too sensational to be true, take a step back. Ask yourself: Who created this content? What are they trying to achieve? And, most importantly, is this information really true?

By questioning what we consume and seeking the truth, we can better navigate the world of AI and ensure it works for us—not against us.

*The following books inspired this post: “Nexus: A Brief History of Information Networks from the Stone Age to AI” by Yuval Noah Harari, and “Hope for Cynics: The Surprising Science of Human Goodness” by Jamil Zaki.